Memory Cache Cleaner

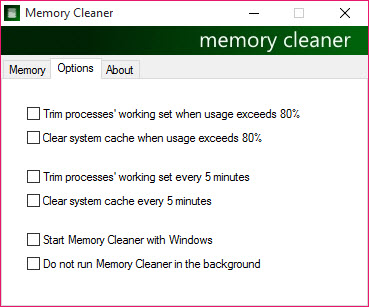

Dec 15, 2018: Memory Cleaner is a freeware memory optimizer for Windows made available by KoshyJohn. The review for Memory Cleaner has not been completed yet, but an editor tested it on a PC and compiled a list of features. It is intended to speed up the system by freeing available memory.

Nov 14, 2020: Advanced System Optimizer monitors your memory usage and cleans up your PC's memory to improve performance. Cached memory can take up memory that newly launched apps need, and Memory Cleaner aims to improve performance by cleaning up cached memory.

So, when I make changes to a specific page and someone else can't see them, I should just use "clear the cache" for that page; but if I make changes to several pages, I should either clear the cache of each page separately or use "delete cache", which lets visitors see the changes across the whole site.

Like every operating system, Windows stores different kinds of cache files on the hard drive. In simple terms, cache files are temporary files kept so that frequently used data can be accessed faster. Over time, cached data can take up a lot of hard drive space, and a bloated cache can contribute to problems such as slow processing, slow startup, lag or hangs, and applications that stop responding. Clearing it from time to time helps keep the system running smoothly. If you don't know how to clear the memory cache on your Windows computer, this post explains how.

5 Ways to Clear Memory Cache on Windows 10/8/7/Vista/XP

Clearing the memory cache can help you make full use of your computer's resources. A cache is useful: it lets applications load faster than they would without cached data. However, cached data can also occupy a large amount of RAM (Random Access Memory), which is why clearing the memory cache is often recommended when performance drops. The methods below will help you clear the memory cache in Windows 10/8/7/Vista and XP.

Method 1: Create Clear Memory Cache Shortcut

This is one of the easiest solutions to clear memory cache on the Windows operating system. This method is completely free; you don't have to install any third-party paid tool to clear the memory cache. Below are some steps to clear memory cache on Windows.

Step 1: First of all, you have to turn on your computer and right-click on the desktop.

Step 2: Here, you will have a couple of options. Click on 'Shortcut' under the New option.

Step 3: The Create Shortcut wizard will appear. Click 'Browse' to locate the item, or simply type '%windir%\system32\rundll32.exe advapi32.dll,ProcessIdleTasks' without quotes in the 'Type the location of the item' text field.

Step 4: Once the above process is completed, you have to click on 'Next' button to proceed to the next step.

Step 5: Now, type a name for this shortcut. Here, we are typing 'Clear Unused RAM'. Then click the 'Finish' button.

Once the shortcut is created, switch to the desktop and double-click on the shortcut to clear memory cache on Windows.

Method 2: Clear General Cache

Disk Cleanup is a built-in feature of Microsoft Windows that lets you remove unwanted files. Using this feature, you can remove temporary files, unwanted files, previous Windows installation files, and much more. You just have to choose the disk and start the deletion process. Below are the steps to clear cached files using Disk Cleanup.

Step 1: At the first step, you have to click on the 'Start' button and type 'disk cleanup' in the search field and hit the 'Enter' button.

Step 2: The 'Disk Cleanup: Drive Selection' dialog box will appear. Choose the drive you would like to clean.

Step 3: Now, click on the 'OK' button. Disk Cleanup will scan the drive and show you how much space the cached files occupy.

Step 4: Tick the checkboxes for the items you would like to clean. If you want to clean system files as well, click on 'Clean up system files'; otherwise, hit the 'OK' button at the bottom of the window.

Step 5: Once the above process is completed, click on 'Delete Files' if prompted. Disk Cleanup will start deleting cached files from locations such as the Recycle Bin, Thumbnails, and many other places.

Method 3: Clear App Data File to Clear Memory Cache

Step 1: Click on the 'Start' button or press the 'Windows' key, then click on 'Computer' to open My Computer.

Step 2: The My Computer window will appear. If you don't see My Computer, type 'My Computer' in the Start menu search field and hit the 'Enter' button.

Step 3: Once the above process is completed, you have to click on the 'Organize' tab in the top left corner of the window.

Step 4: Then, choose 'Folder and search options' under the organize drop-down menu.

Step 5: A Folder Options dialog box will appear with three tabs; click on the 'View' tab.

Step 6: Now, select the 'Show hidden files, folders, and drives' radio button under 'Hidden files and folders'.

Step 7: Click on the 'OK' button at the bottom of the window.

Step 8: Once the above process is successfully completed, open the hard disk where Windows is installed.

Step 9: Now, open the 'Users' folder by double-clicking on it. Then, open the folder for the administrator account.

Step 10: Locate the 'AppData' folder and open it by double-clicking, then open the 'Local' folder.

Step 11: Now, you have to select the 'Temp' folder and open it.

Step 12: Remove the read-only permissions from the Temp folder.

Step 13: You have to select all files that are placed in the Temp folder. You can press 'Ctrl' + 'A' to select all files.

Step 14: Once the files are selected, Press 'Shift' + 'Delete' button simultaneously to permanently delete all temp files.
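The manual steps above can also be scripted. The following is a minimal sketch in Python, not a Windows tool: the helper name `clear_folder` is hypothetical, and it assumes that skipping entries that cannot be deleted (for example, files locked by a running program) is acceptable.

```python
import shutil
from pathlib import Path
from typing import Tuple


def clear_folder(folder: Path) -> Tuple[int, int]:
    """Delete everything inside `folder` but keep the folder itself.

    Returns (removed, skipped). Entries that cannot be deleted, for
    example files locked by a running program, are counted as skipped.
    """
    removed = skipped = 0
    for entry in folder.iterdir():
        try:
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)   # remove a subfolder and its contents
            else:
                entry.unlink()         # remove a single file or link
            removed += 1
        except OSError:
            skipped += 1
    return removed, skipped
```

Like 'Shift' + 'Delete', this removes files permanently rather than sending them to the Recycle Bin, so point it only at a folder such as the Temp folder opened in Step 11.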

Method 4: Delete Internet Explorer Files to Clear Memory Cache

Step 1: First of all, click on the 'Start' button and type 'Internet Explorer' in the text field and press the 'Enter' button.

Step 2: The Internet Explorer window will appear. Open 'Internet options' from the Tools menu, then click on the 'General' tab at the top of the dialog.

Step 3: Now, you have to click on the 'Settings' under the browsing history section.

Step 4: Then, click on 'View files' at the screen's bottom right side. Once the above process is completed, a new window will appear with all cached files created by Internet Explorer.

Step 5: You have to press the 'Ctrl' + 'A' button simultaneously to select all the cache files.

Step 6: Now, you have to press the 'Shift' + 'Delete' button to remove all cached files permanently.

Method 5: Clear the DNS Cache

Step 1: Initially, you have to click on the 'Start' menu by clicking on the Start button at the bottom left of the screen.

Step 2: Now, type 'command prompt' and hit the Enter button to launch it. You can also right-click Command Prompt in the results and choose 'Run as administrator'.

Step 3: At the command prompt, type 'ipconfig /flushdns' without quotes and press the 'Enter' button.

Step 4: Wait a couple of seconds until you are notified that the DNS cache has been flushed. If the change doesn't seem to take effect, restart your computer.
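If you clear the DNS cache often, the command from Step 3 can be wrapped in a small script. This is a sketch in Python under the assumption that it runs on Windows; the names `FLUSH_DNS_COMMAND` and `flush_dns` are hypothetical, and on other systems the function does nothing.

```python
import platform
import subprocess
from typing import List, Optional

# The same command typed at the prompt in Step 3.
FLUSH_DNS_COMMAND: List[str] = ["ipconfig", "/flushdns"]


def flush_dns() -> Optional[int]:
    """Run `ipconfig /flushdns` and return its exit code.

    Returns None without running anything when not on Windows.
    """
    if platform.system() != "Windows":
        return None
    completed = subprocess.run(FLUSH_DNS_COMMAND, check=False)
    return completed.returncode
```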

By Rick Anderson, John Luo, and Steve Smith

View or download sample code (how to download)

Caching basics

Caching can significantly improve the performance and scalability of an app by reducing the work required to generate content. Caching works best with data that changes infrequently and is expensive to generate. Caching makes a copy of data that can be returned much faster than from the source. Apps should be written and tested to never depend on cached data.

ASP.NET Core supports several different caches. The simplest cache is based on the IMemoryCache. IMemoryCache represents a cache stored in the memory of the web server. Apps running on a server farm (multiple servers) should ensure sessions are sticky when using the in-memory cache. Sticky sessions ensure that subsequent requests from a client all go to the same server. For example, Azure Web apps use Application Request Routing (ARR) to route all subsequent requests to the same server.

Non-sticky sessions in a web farm require a distributed cache to avoid cache consistency problems. For some apps, a distributed cache can support higher scale-out than an in-memory cache. Using a distributed cache offloads the cache memory to an external process.

The in-memory cache can store any object. The distributed cache interface is limited to byte[]. The in-memory and distributed cache store cache items as key-value pairs.

System.Runtime.Caching/MemoryCache

System.Runtime.Caching/MemoryCache (NuGet package) can be used with:

- .NET Standard 2.0 or later.

- Any .NET implementation that targets .NET Standard 2.0 or later. For example, ASP.NET Core 2.0 or later.

- .NET Framework 4.5 or later.

Microsoft.Extensions.Caching.Memory/IMemoryCache (described in this article) is recommended over System.Runtime.Caching/MemoryCache because it's better integrated into ASP.NET Core. For example, IMemoryCache works natively with ASP.NET Core dependency injection.

Use System.Runtime.Caching/MemoryCache as a compatibility bridge when porting code from ASP.NET 4.x to ASP.NET Core.

Cache guidelines

- Code should always have a fallback option to fetch data and not depend on a cached value being available.

- The cache uses a scarce resource, memory. Limit cache growth:

- Do not use external input as cache keys.

- Use expirations to limit cache growth.

- Use SetSize, Size, and SizeLimit to limit cache size. The ASP.NET Core runtime does not limit cache size based on memory pressure. It's up to the developer to limit cache size.
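The first guideline, always having a fallback, can be illustrated with a small language-neutral sketch. It is written in Python rather than against the ASP.NET Core API; the helper `get_with_fallback` and its parameters are hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, Hashable, TypeVar

T = TypeVar("T")


def get_with_fallback(cache: Dict[Hashable, T], key: Hashable,
                      load_from_source: Callable[[], T]) -> T:
    """Return the cached value, or load it from the source on a miss."""
    try:
        return cache[key]
    except KeyError:
        # The cache is only an optimization: on a miss, go to the source.
        value = load_from_source()
        cache[key] = value
        return value
```

Because the caller always supplies `load_from_source`, the code keeps working when the entry has expired or the whole cache has been emptied.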

Use IMemoryCache

Warning

Using a shared memory cache from Dependency Injection and calling SetSize, Size, or SizeLimit to limit cache size can cause the app to fail. When a size limit is set on a cache, all entries must specify a size when being added. This can lead to issues since developers may not have full control over what uses the shared cache. For example, Entity Framework Core uses the shared cache and does not specify a size. If an app sets a cache size limit and uses EF Core, the app throws an InvalidOperationException.

When using SetSize, Size, or SizeLimit to limit cache size, create a cache singleton for caching. For more information and an example, see Use SetSize, Size, and SizeLimit to limit cache size.

A shared cache is one shared by other frameworks or libraries. For example, EF Core uses the shared cache and does not specify a size.

In-memory caching is a service that's referenced from an app using Dependency Injection. Request the IMemoryCache instance in the constructor:

The following code uses TryGetValue to check if a time is in the cache. If a time isn't cached, a new entry is created and added to the cache with Set. The CacheKeys class is part of the download sample.

The current time and the cached time are displayed:

The following code uses the Set extension method to cache data for a relative time without creating the MemoryCacheEntryOptions object.

The cached DateTime value remains in the cache while there are requests within the timeout period.

The following code uses GetOrCreate and GetOrCreateAsync to cache data.

The following code calls Get to fetch the cached time:

The following code gets or creates a cached item with absolute expiration:

A cached item set with a sliding expiration only is at risk of becoming stale. If it's accessed more frequently than the sliding expiration interval, the item will never expire. Combine a sliding expiration with an absolute expiration to guarantee that the item expires once its absolute expiration time passes. The absolute expiration sets an upper bound to how long the item can be cached while still allowing the item to expire earlier if it isn't requested within the sliding expiration interval. When both absolute and sliding expiration are specified, the expirations are logically ORed. If either the sliding expiration interval or the absolute expiration time pass, the item is evicted from the cache.

The following code gets or creates a cached item with both sliding and absolute expiration:

The preceding code guarantees the data will not be cached longer than the absolute time.
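The way sliding and absolute expiration combine can be modelled in a few lines. The sketch below is Python, not the ASP.NET Core API: the `Entry` class and its fields are hypothetical, and time is passed in explicitly as a number of seconds so the behavior is easy to follow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Entry:
    value: object
    created: float              # time the entry was added
    last_access: float          # time of the most recent successful read
    sliding: Optional[float]    # allowed seconds of inactivity, if any
    absolute: Optional[float]   # maximum lifetime in seconds, if any

    def is_expired(self, now: float) -> bool:
        # The two conditions are ORed: either one expires the entry.
        idle_too_long = (self.sliding is not None
                         and now - self.last_access >= self.sliding)
        too_old = (self.absolute is not None
                   and now - self.created >= self.absolute)
        return idle_too_long or too_old

    def read(self, now: float) -> Optional[object]:
        if self.is_expired(now):
            return None
        self.last_access = now  # a successful read resets the sliding clock
        return self.value
```

With `sliding=3` and `absolute=None`, an entry read every 2 seconds never expires, which is the staleness risk described above. Adding `absolute=10` makes the same entry expire 10 seconds after it was created, however often it is read.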

GetOrCreate, GetOrCreateAsync, and Get are extension methods in the CacheExtensions class. These methods extend the capability of IMemoryCache.

MemoryCacheEntryOptions

The following sample:

- Sets a sliding expiration time. Requests that access this cached item will reset the sliding expiration clock.

- Sets the cache priority to CacheItemPriority.NeverRemove.

- Sets a PostEvictionDelegate that will be called after the entry is evicted from the cache. The callback is run on a different thread from the code that removes the item from the cache.

Use SetSize, Size, and SizeLimit to limit cache size

A MemoryCache instance may optionally specify and enforce a size limit. The cache size limit does not have a defined unit of measure because the cache has no mechanism to measure the size of entries. If the cache size limit is set, all entries must specify size. The ASP.NET Core runtime does not limit cache size based on memory pressure. It's up to the developer to limit cache size. The size specified is in units the developer chooses.

For example:

- If the web app was primarily caching strings, each cache entry size could be the string length.

- The app could specify the size of all entries as 1, and the size limit is the count of entries.

If SizeLimit isn't set, the cache grows without bound. The ASP.NET Core runtime doesn't trim the cache when system memory is low. Apps must be architected to:

- Limit cache growth.

- Call Compact or Remove when available memory is limited:

The following code creates a unitless fixed size MemoryCache accessible by dependency injection:

SizeLimit does not have units. Cached entries must specify size in whatever units they deem most appropriate if the cache size limit has been set. All users of a cache instance should use the same unit system. An entry will not be cached if the sum of the cached entry sizes exceeds the value specified by SizeLimit. If no cache size limit is set, the cache size set on the entry will be ignored.
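The size rules in the preceding paragraph can be sketched as a small model. This is Python, not the MemoryCache implementation: the class `SizeLimitedCache` is hypothetical, it raises `ValueError` where the source says ASP.NET Core throws an InvalidOperationException, and it simply rejects an entry that does not fit.

```python
from typing import Dict, Hashable, Optional, Tuple


class SizeLimitedCache:
    def __init__(self, size_limit: Optional[int] = None) -> None:
        self.size_limit = size_limit  # unitless; the developer picks the unit
        self._items: Dict[Hashable, Tuple[object, int]] = {}
        self.current_size = 0

    def set(self, key: Hashable, value: object,
            size: Optional[int] = None) -> bool:
        if self.size_limit is None:
            # No limit set: any size given for the entry is ignored.
            self._items[key] = (value, 0)
            return True
        if size is None:
            raise ValueError("entries must specify a size when a limit is set")
        old_size = self._items[key][1] if key in self._items else 0
        if self.current_size - old_size + size > self.size_limit:
            return False              # the entry is not cached
        self._items[key] = (value, size)
        self.current_size += size - old_size
        return True

    def get(self, key: Hashable) -> Optional[object]:
        item = self._items.get(key)
        return None if item is None else item[0]
```

Giving every entry `size=1` turns `size_limit` into a maximum entry count; passing `len(text)` for cached strings makes it a character budget instead.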

The following code registers MyMemoryCache with the dependency injection container.

MyMemoryCache is created as an independent memory cache for components that are aware of this size limited cache and know how to set cache entry size appropriately.

The following code uses MyMemoryCache:

The size of the cache entry can be set by Size or the SetSize extension methods:

MemoryCache.Compact

MemoryCache.Compact attempts to remove the specified percentage of the cache in the following order:

- All expired items.

- Items by priority. Lowest priority items are removed first.

- Least recently used objects.

- Items with the earliest absolute expiration.

- Items with the earliest sliding expiration.

Pinned items with priority NeverRemove are never removed. The following code removes a cache item and calls Compact:

See Compact source on GitHub for more information.
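The removal order listed above can be approximated with a sort key. The sketch below is a simplified Python model, not the actual Compact implementation: the `Item` class, the numeric priority values, and the choice to measure the percentage by entry count are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

NEVER_REMOVE = 3  # assumed scale: 0 = low, 1 = normal, 2 = high, 3 = pinned


@dataclass
class Item:
    key: str
    priority: int
    last_access: float
    absolute_expiry: Optional[float] = None  # point in time
    sliding: Optional[float] = None          # allowed seconds of inactivity

    def is_expired(self, now: float) -> bool:
        by_absolute = (self.absolute_expiry is not None
                       and now >= self.absolute_expiry)
        by_sliding = (self.sliding is not None
                      and now - self.last_access >= self.sliding)
        return by_absolute or by_sliding


def compact(items: List[Item], percentage: float, now: float) -> List[str]:
    """Return the keys to remove, in removal order."""
    target = int(len(items) * percentage)
    inf = float("inf")
    expired = [i for i in items if i.is_expired(now)]
    live = [i for i in items
            if not i.is_expired(now) and i.priority != NEVER_REMOVE]
    live.sort(key=lambda i: (
        i.priority,                                            # lowest first
        i.last_access,                                         # least recent
        i.absolute_expiry if i.absolute_expiry is not None else inf,
        i.last_access + i.sliding if i.sliding is not None else inf,
    ))
    removed = [i.key for i in expired]       # all expired items go first
    remaining = max(0, target - len(removed))
    removed += [i.key for i in live[:remaining]]
    return removed
```

Pinned items never appear in `live`, so even `compact(items, 1.0, now)` leaves them in place.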

Cache dependencies

The following sample shows how to expire a cache entry if a dependent entry expires. A CancellationChangeToken is added to the cached item. When Cancel is called on the CancellationTokenSource, both cache entries are evicted.

Using a CancellationTokenSource allows multiple cache entries to be evicted as a group. With the using pattern in the code above, cache entries created inside the using block will inherit triggers and expiration settings.
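Group eviction through a shared token can be modelled as follows. This is an illustrative Python sketch, not the CancellationTokenSource or CancellationChangeToken types themselves: `CancellationSource` and `TokenCache` are hypothetical stand-ins, and the sketch ignores the case where a key is later overwritten without the token.

```python
from typing import Callable, Dict, Hashable, List, Optional


class CancellationSource:
    """Minimal stand-in for a cancellation token source."""

    def __init__(self) -> None:
        self.cancelled = False
        self._callbacks: List[Callable[[], None]] = []

    def register(self, callback: Callable[[], None]) -> None:
        self._callbacks.append(callback)

    def cancel(self) -> None:
        if self.cancelled:
            return
        self.cancelled = True
        for callback in self._callbacks:
            callback()               # notify every registered entry


class TokenCache:
    def __init__(self) -> None:
        self._items: Dict[Hashable, object] = {}

    def set(self, key: Hashable, value: object,
            source: Optional[CancellationSource] = None) -> None:
        self._items[key] = value
        if source is not None:
            # Evict this entry as soon as the shared source is cancelled.
            source.register(lambda: self._items.pop(key, None))

    def get(self, key: Hashable) -> Optional[object]:
        return self._items.get(key)
```

Every entry registered with the same source is removed by a single `cancel()` call, while entries added without a source are unaffected.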

Additional notes

- Expiration doesn't happen in the background. There is no timer that actively scans the cache for expired items. Any activity on the cache (Get, Set, Remove) can trigger a background scan for expired items. A timer on the CancellationTokenSource (CancelAfter) also removes the entry and triggers a scan for expired items. The following example uses CancellationTokenSource(TimeSpan) for the registered token. When this token fires, it removes the entry immediately and fires the eviction callbacks:

When using a callback to repopulate a cache item:

- Multiple requests can find the cached key value empty because the callback hasn't completed.

- This can result in several threads repopulating the cached item.
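One common way to avoid several threads repopulating the same item is to serialize the work per key. The source does not prescribe this; the following Python sketch, with the hypothetical class `SingleFlightCache`, only illustrates the idea and has no expiration or removal.

```python
import threading
from typing import Callable, Dict, Hashable

_MISSING = object()  # sentinel so that None can be a cached value


class SingleFlightCache:
    def __init__(self) -> None:
        self._values: Dict[Hashable, object] = {}
        self._key_locks: Dict[Hashable, threading.Lock] = {}
        self._guard = threading.Lock()        # protects _key_locks

    def _lock_for(self, key: Hashable) -> threading.Lock:
        with self._guard:
            return self._key_locks.setdefault(key, threading.Lock())

    def get_or_create(self, key: Hashable,
                      factory: Callable[[], object]) -> object:
        value = self._values.get(key, _MISSING)   # fast path, no lock
        if value is not _MISSING:
            return value
        with self._lock_for(key):
            value = self._values.get(key, _MISSING)
            if value is _MISSING:                 # re-check after waiting
                value = factory()
                self._values[key] = value
            return value
```

Threads that arrive while the factory is running wait on the per-key lock and then read the stored value instead of calling the factory again.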

When one cache entry is used to create another, the child copies the parent entry's expiration tokens and time-based expiration settings. The child isn't expired by manual removal or updating of the parent entry.

Use PostEvictionCallbacks to set the callbacks that will be fired after the cache entry is evicted from the cache.

For most apps, IMemoryCache is enabled. For example, calling AddMvc, AddControllersWithViews, AddRazorPages, AddMvcCore().AddRazorViewEngine, and many other Add{Service} methods in ConfigureServices enables IMemoryCache. For apps that are not calling one of the preceding Add{Service} methods, it may be necessary to call AddMemoryCache in ConfigureServices.

Background cache update

Use a background service such as IHostedService to update the cache. The background service can recompute the entries and then assign them to the cache only when they’re ready.
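The "recompute, then assign when ready" pattern can be sketched with a background thread. This is Python rather than an IHostedService implementation; `refresh_entry` and `start_refresher` are hypothetical names, and a plain dictionary stands in for the cache.

```python
import threading
from typing import Callable, Dict, Hashable


def refresh_entry(cache: Dict[Hashable, object], key: Hashable,
                  recompute: Callable[[], object]) -> None:
    """Recompute a value fully, then publish it with one assignment."""
    new_value = recompute()   # readers keep seeing the old value meanwhile
    cache[key] = new_value    # swap in the finished value


def start_refresher(cache: Dict[Hashable, object], key: Hashable,
                    recompute: Callable[[], object], interval_seconds: float,
                    stop: threading.Event) -> threading.Thread:
    """Refresh `key` every `interval_seconds` until `stop` is set."""
    def loop() -> None:
        while not stop.is_set():
            refresh_entry(cache, key, recompute)
            stop.wait(interval_seconds)   # sleeps, but wakes early on stop

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread
```

Request-handling code only ever reads `cache[key]`, so it never waits for a recomputation; calling `stop.set()` ends the loop.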
